About CQE

What Is CQE?

The Common Quality Enumeration (CQE) is a formal list of quality issues and the supplemental aspects that define them. The CQE is an effort to:

- Serve as a common language for describing facets of "quality" such as characteristics, measurable attributes, established guidelines and practices, testing, and potential consequences due to lack of quality

- Serve as a standard set of tools, references, and methods for measuring and capturing quality areas of interest

- Provide a common baseline standard for identification, mitigation, and improvement efforts for quality areas of interest

Introduction

Some Common Types of Quality Characteristics:

- Maintainability

- Functionality

- Security

- Reliability

- Performance

Within the realm of software-enabled devices and systems, there is a wide spectrum of mistakes, or weaknesses, in software architecture, design, coding, and deployment. These weaknesses can undermine the device's or system's ability to do what was intended, or allow environmental disruptions, system faults, human errors, or attacks to impact the software in undesirable ways.

When this happens, we say that the quality of the software in these devices and systems was not sufficient to meet the business and mission requirements. But why was this poor-quality software allowed to leave development and endanger the organization's operations and business? Often it is because the need for specific quality in the software is not communicated clearly, or in a manner understandable to those who drive its development, test it, buy it, or operate it.

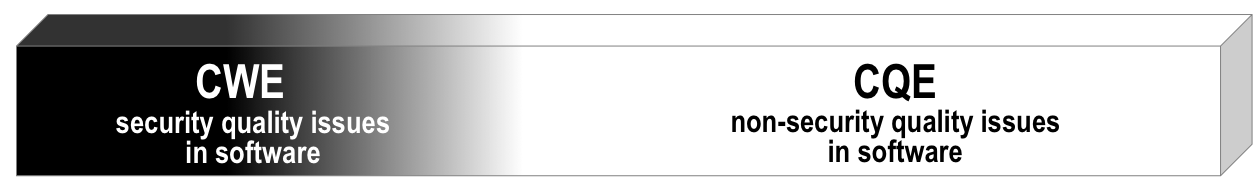

For weaknesses that tend to lead to security consequences, the Common Weakness Enumeration (CWE) effort provides a nomenclature to discuss them among and with these parties; however, many other quality aspects can impact the business and mission. For these other issues, there was no publicly available nomenclature for describing, correlating, organizing, and discussing the issues in the way that CWE provides for security-related quality issues.

The Common Quality Enumeration (CQE) effort (as indicated in Figure 1 above) aims to address this gap by creating a nomenclature for describing these "other" quality issues found in software. It does so by collecting and organizing privately and publicly available community knowledge and by crafting a publicly available repository of quality issues (other than those that lead to security impacts). This allows different teams with different tools and different concerns to learn from each other and understand one another's work, making management of quality issues (other than security) more consistent, auditable, and cost-effective.

With CQE and CWE, the respective communities focused on the quality concerns each covers now have an appropriate lingua franca for their use and maturation, as illustrated in Figure 2.

Background

Since the creation of the CWE repository in 2004, there has been an ongoing discussion about which weaknesses belong in CWE and which do not. The deciding distinction is whether or not a weakness can lead to a security-relevant issue. This leaves out many other types of weaknesses that can cause problems with systems and impact the job those systems were intended to do.

While there have been decades (if not centuries) of discussion about the impact of quality on what we do and on the systems and devices we use to accomplish various tasks, there have only been broad taxonomic categorizations and groupings of these issues. There has never been an actual detailed enumeration that defines the issues and specifically outlines how to find them, their consequences, and mitigations to avoid or moderate their impact.

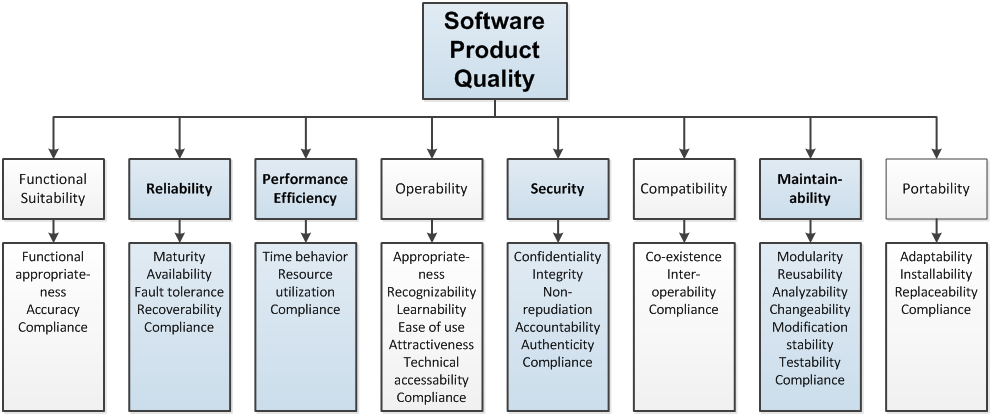

The latest example of this type of taxonomy work can be found in ISO/IEC 25010, System and Software Quality Models, where software product quality is modeled as a composition of Functional Suitability, Reliability, Performance Efficiency, Operability, Security, Maintainability, and Portability, as shown in Figure 3 below.

MITRE has performed work and research efforts in areas of this software quality domain under the title of the Software Quality Assessment Exercise (SQAE) since 1992 [1]; the SQAE assessment methodology has been licensed to 13 organizations since 1992 [2]. Over the course of the last 23 years, the SQAE has been used by MITRE to examine the artifacts of nearly 200 systems for risk indicators in the areas of software maintainability, evolvability, portability, and documentation, with security of the software added as a new element of these assessments in recent years. As documented in several papers, this work leveraged earlier efforts from Rome Air Defense Labs and industry to examine the software artifacts of a system to determine how a project addressed several quality aspects that could lead to failure or problems [3, 4, 5, 6].

Related Efforts

In 2009, the Object Management Group (OMG) and Carnegie Mellon's Software Engineering Institute (SEI) co-launched the Consortium for IT-Software Quality (CISQ) to focus on artifact-based assessment of software quality in development and maintenance. MITRE was asked to present its work in CWE and SQAE at the US launch meeting, where the stakeholders and the specific areas of quality that CISQ would address were identified [7]. Subsequently, MITRE helped drive portions of the work.

Through 2010 and 2011, a group of several researchers worked to address five areas of measurable quality, with specific attributes and metrics identified and described. The researchers then reviewed and evolved the work with the stakeholders of the CISQ community, which resulted in two white papers, one on function point measurement and the other on the quality areas of security, performance, reliability, and maintainability [8].

The Architecture Driven Modernization Task Force within the OMG then used these five areas to foster the following five standards, which were completed and approved as OMG standards in 2015:

- Automated Function Points (AFP)

- Automated Source Code Security Measure (ASCSM)

- Automated Source Code Performance Efficiency Measure (ASCPEM)

- Automated Source Code Reliability Measure (ASCRM)

- Automated Source Code Maintainability Measure (ASCMM)

The specific measurable attributes identified in these standards, along with the industry software quality measurement tools, provide much of the source information that the CQE team is leveraging for CQE.

References

1. Now retitled "The Software Quality Assurance Exercise."

2. https://www.mitre.org/research/technology-transfer/technology-licensing/software-quality-assurance-evaluation-sqae

3. http://citeseerx.ist.psu.edu/viewdoc/download;jsessionid=63D4D09CDCE24E0C83009B7A785F7AA8?doi=10.1.1.118.9818&rep=rep1&type=pdf

4. https://www.researchgate.net/profile/Robert_Martin10/publication/285403022_PROVIDING_A_FRAMEWORK_FOR_EFFECTIVE_SOFTWARE_QUALITY_MEASUREMENT_MAKING_A_SCIENCE_OF_RISK_ASSESSMENT/links/568a646c08ae051f9afa48bf.pdf

5. https://www.researchgate.net/profile/Robert_Martin10/publication/242281307_Using_Product_Quality_Assessment_to_Broaden_the_Evaluation_of_Software_Engineering_Capability/links/5408747d0cf23d9765b2aec4.pdf

6. https://www.etsmtl.ca/Professeurs/claporte/documents/publications/Evolving_Soft_Qual_Prof_June_04.pdf

7. http://it-cisq.org/wp-content/uploads/2012/09/cisq_annual_progress_report-2010.pdf

8. http://it-cisq.org/standards/

Contact Us

To discuss the CQE effort in general, the impacts and transition opportunities noted above, or any other questions or concerns, please email us at cqe@mitre.org.