Process for Building the Common Quality Enumeration Framework

Collecting Content

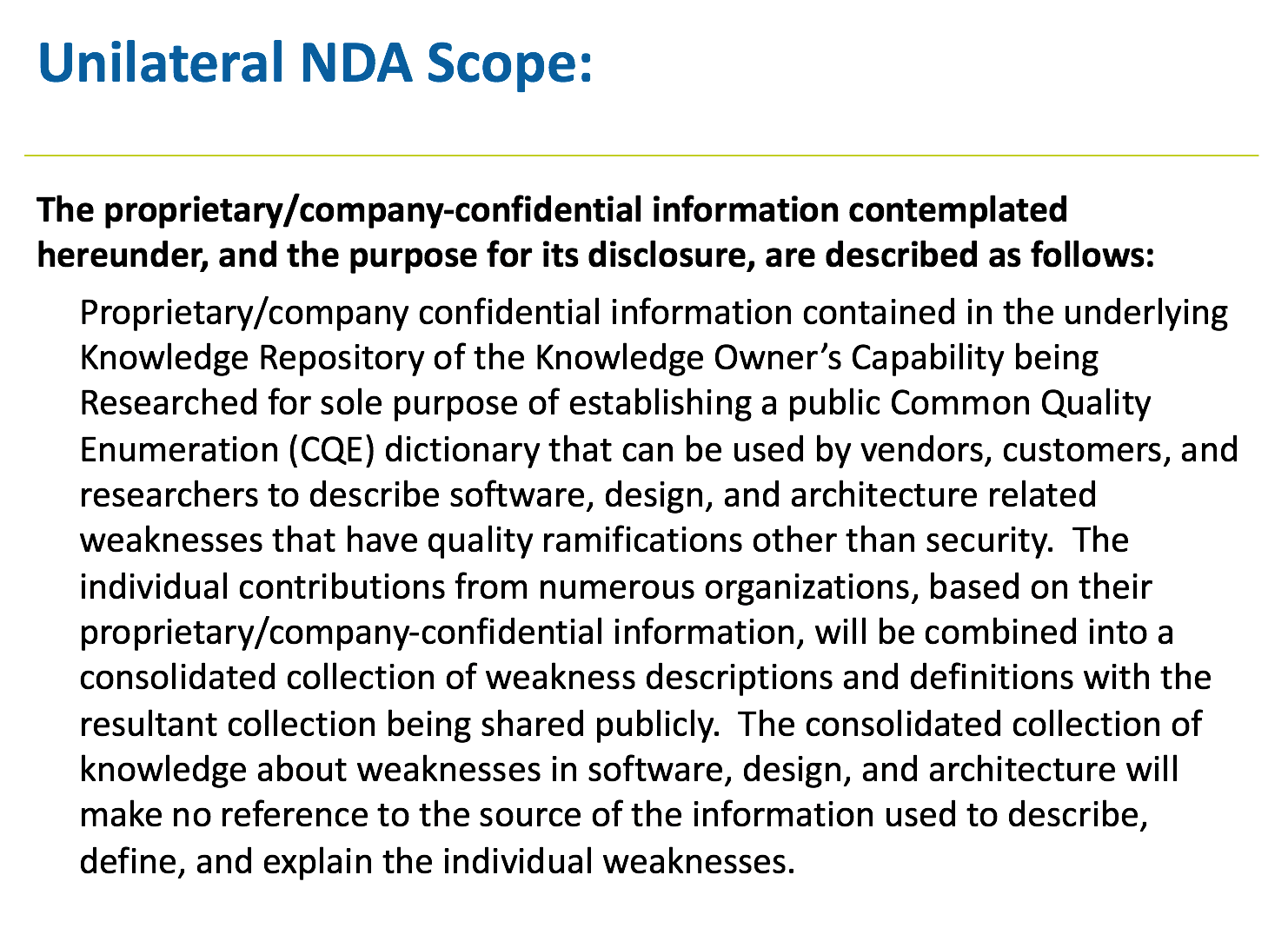

As the CQE team reached out to the community of vendors and researchers (that had tools, standards, and knowledge-bases of information about software quality issues), it prepared Unilateral Non-Disclosure Agreements (NDAs) with the specific scope (Figure 1) of allowing for the anonymization of the donated materials and synthesis of the various donations into one corpus—the Common Quality Enumeration. The CQE team used the Unilateral NDA successfully in the initial establishment of CWE and found it to be well-received by the dozen tool vendors and researchers it contacted.

With agreements in hand, the first discussions of what information was needed (as well as in what form and format) began in earnest, as did the preparation for analyzing and synthesizing the data delivered.

The team based the initial framework for the CQE on the CWE format and process and leveraged lessons learned from CWE. It made adjustments based on prior experiences with structuring a body of content such as CQE into something that can be worked on by several researchers while still supporting the needed integrity and consistency of a publicly aimed collection. The CQE team then organized the contributed content and began analyzing the various materials to populate a draft version of CQE.

Analysis and Synthesis

To properly appreciate, analyze, and correlate the contributions of the various organizations that provide quality content, the CQE team first had to agree on and understand what the concept of "quality" is as a measurable property and how to organize the materials contributed.

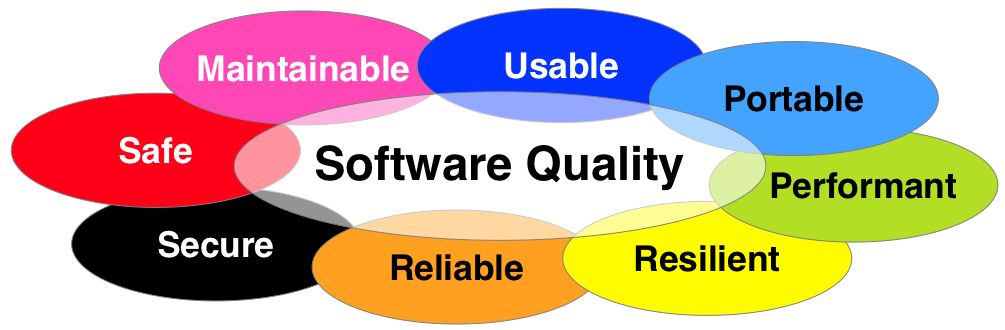

The current organizing concepts are shown in Figure 2.

As any review of the available research will reveal, quality is often, by its nature, subjective and context dependent. "Quality," as a noun, is a characteristic of some sort, while as an adjective, it implies the possession of certain positive attributes. To truly understand how to detect, describe, and communicate about quality, we have to understand the nature of the attributes that seem to signify the presence or absence of a specific type of quality and, most importantly, how we can measure the presence or absence of those attributes and communicate about it.

Unlike in its previous enumeration efforts with CWE and CVE, where there had been copious previous discussion and debate about specific "issues" (whether they were specific vulnerabilities or types of vulnerabilities), MITRE has found that the tools, research, and terminology related to "software quality" are much more diffuse, less well-defined, and somewhat inconsistent across the community. As such, the development of a community-accepted and adopted CQE is likely to face more technical and social challenges than other projects such as CWE and CVE.

Conceptualizing a Quality Enumeration

Nevertheless, through much discussion, debate, contemplation, examination, and study of the donated software quality content, the CQE team has defined certain key concepts and settled on initial terms. These terms are based on extensive reviews of how such concepts/terms have been used in vendor-provided documents, online sources, and documents (such as CISQ and SQAE).

Some CQE concepts and elements are the same as those used in CWE, and anyone looking at the CQE XML or its schema will find familiar concepts, such as views, relationships, entry name, ID, description, references, and structured text. Some familiarity with CWE is assumed, but readers who need more information can review the CWE effort's glossary, which has plentiful explanations of those concepts.

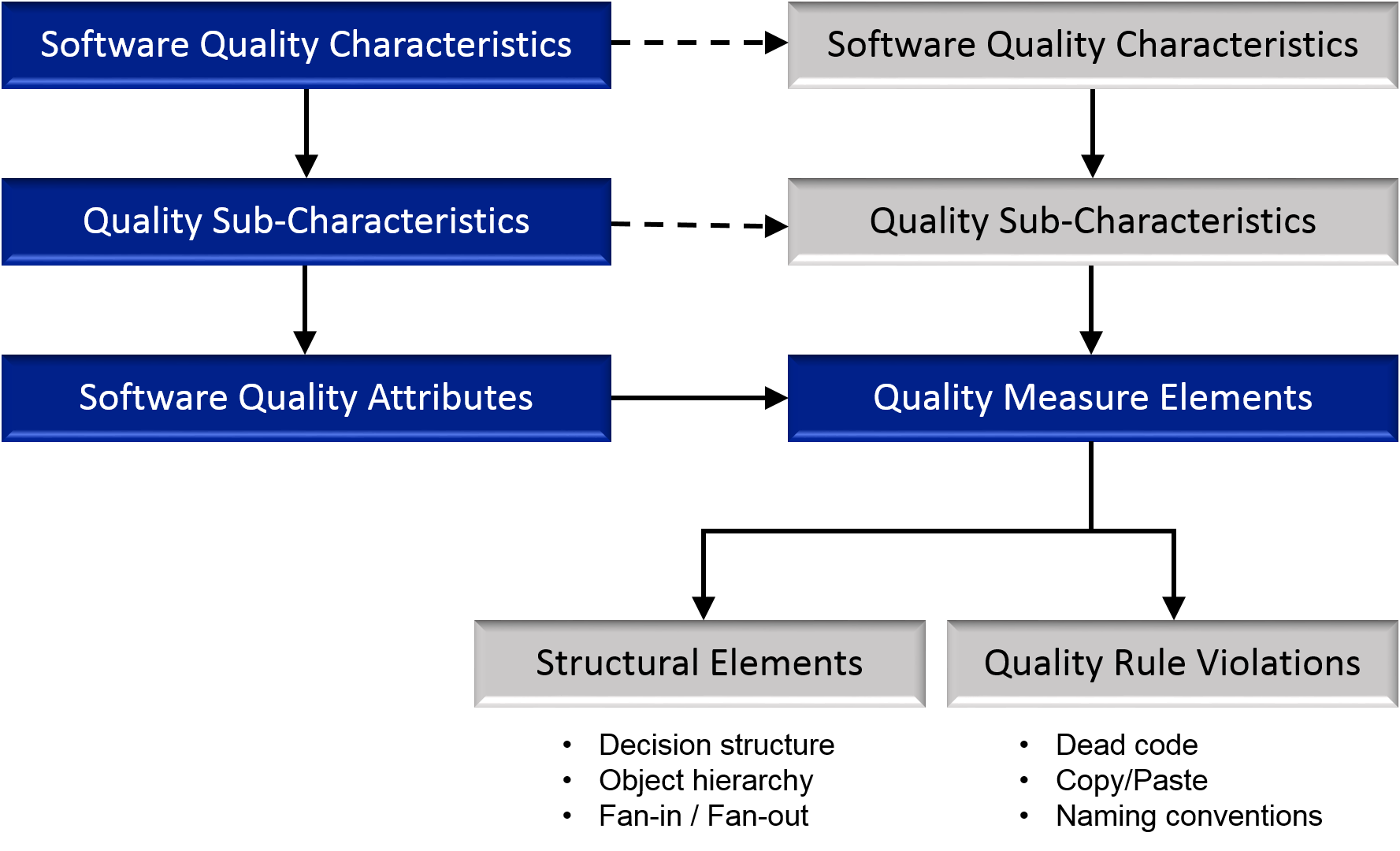

For the needs of the CQE repository, the CQE team concluded that a practical way to capture and convey the needed information was with the following four conceptual elements:

- Quality Characteristics

- Quality Attributes

- Practices

- Consequences

It should be noted that these terms are newly defined for CQE and will remain subject to community review, discussion, and agreement, even though the first two are also used within the ISO/IEC 25010 and 15939 Framework for Software Quality Characteristics and Sub-Characteristics Measurement, as shown in Figure 3.

These key concepts appear in various forms in previous work on software quality by MITRE and others in the industry. The team felt that utilizing these concepts and keeping them at a high level of conceptualization will allow the needed flexibility when capturing and conveying the co-dependencies and collections of concepts needed to catalog and organize the material. This structure allows the capture of the concurrent presence of certain Sub-Characteristics and basically "defines" how measurable aspects of software quality relate to the Quality Characteristics such as Safety, Security, Reliability, Maintainability, Performance and Portability.

A Quality Characteristic serves as an overarching set of subjective areas of interest, such as maintainability, security, functionality, and other concepts that are fairly well understood in conversation. Often a Quality Characteristic is representable as a grouping of Quality Sub-Characteristics based on the quality community's collective understanding of what areas are deemed important to that characteristic. A Sub-Characteristic largely falls within the category of what something means given a certain context.

A Quality Attribute is a precise statement regarding an observable (and therefore measurable), desirable property of a software product. It is expressed in artifacts of the software such as documentation, design, implementation (i.e., code), and deployed configuration of the software, etc., independent of whether the statement can be observed by automated, manual, or mixed processes. A Quality Attribute can be identified either systematically or programmatically, for example, through a true/false analysis, objective descriptions, or traits that answer the question of what something is.

The Practices element is a collection of the various, widely adopted principles, practices, guidelines, and other "best practice" scenarios used in many Quality Sub-Characteristics, Attributes, or Consequences. Often focused around specific "practices" followed by developers, these elements are independent of whether (or how) those practices reflect "quality," "practical" ideas of quality, or well-defined guidelines, principles, practices, etc. They may be well-understood but are still too abstract to have specific, measurable attributes associated with them (roughly, a "class" of Quality Attributes). While they rarely define specific attributes that should be present, they do provide some loose guidance as to what an element should strive for to achieve quality.

The Consequences element has been called out as a library of various consequences, risk factors that may have an adverse impact on perceived quality, and all the various intricacies of consequential relationships. It is important to understand Consequences because many consequences, or risks, to the software project (such as time, effort, and money) are universal concerns, regardless of the specific purpose or function of the software.

How the four element types work in tandem depends a lot on the context and interest of the user; however, all elements are important in a full analysis of quality.

At a foundational level, the Quality Attribute is tied to and identifies the core of a Quality Issue in the software. Due to its objective nature, it implies there is some attribute that is measurable in some way. "Detection Method" is a central tenet of the Quality Attribute, as it defines a specific way to detect the presence or absence of something that would undermine a particular type of software quality.

If all conditions are met, a quality has been met or it is "of" that quality. If it falls short in some manner or does not meet a defined threshold, it does not meet the definition of that quality. Many threshold-driven baselines can simply be a high-level practice to be followed, while specific tests have a solid mathematical threshold or pass/fail value.
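The relationship between a Quality Attribute, its detection method, and a pass/fail threshold can be illustrated with a small sketch. This is not taken from the CQE schema: the class `QualityAttribute`, its fields, the `longest_line` detection method, and the "value must not exceed the threshold" convention are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class QualityAttribute:
    """Hypothetical model of a Quality Attribute with one detection method."""
    name: str
    measure: Callable[[str], float]  # detection method: artifact text -> measured value
    threshold: float                 # maximum acceptable value (assumed convention)

    def is_met(self, artifact: str) -> bool:
        # The quality is met only when the measured value stays within the threshold.
        return self.measure(artifact) <= self.threshold


def longest_line(source: str) -> float:
    """Illustrative detection method: length of the longest line in the artifact."""
    return float(max((len(line) for line in source.splitlines()), default=0))


line_length = QualityAttribute("max line length", longest_line, threshold=20)
short_ok = line_length.is_met("x = 1\ny = 2\n")
too_long = line_length.is_met("value = compute_something_quite_long()\n")
```

Here `short_ok` is true and `too_long` is false. A high-level practice without a numeric threshold would not fit this shape, which is one reason the text distinguishes practices from specific tests.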

The Quality Characteristics and Consequence elements are more subjective in nature but critical to the discussion of quality, since they answer the question, "why do I care?" Characteristics demonstrate areas of interest so that strategies, design, budgeting, and the various aspects and activities of the software lifecycle can be monitored, explored, or focused on; whereas Consequences demonstrate the risks and costs associated with each quality area of interest. Sub-Characteristics are used to generalize and group together specific attributes by area of interest, and Consequences are often woven in complex patterns of butterfly effects that may not be known from a cursory look at the initial area of interest.

By utilizing these four top-level elements, the CQE collection will organize, capture, and standardize the expression of quality and the discussion and thought about it. In this way individuals and organizations from different communities and cultures can successfully communicate and collaborate in a manner that allows quality to be discussed, understood, and improved without needing subject matter expertise in any specific context.

As the CQE team began to capture portions of the donated content into the CQE, some additional concepts seemed appropriate for the CQE schema, for example, "maturity" (as captured by the "status" attribute in CWE). This metadata reflects how mature the CQE entry is, i.e., how complete and accurate the entry is according to CQE maintainers. The maturity options are: Preliminary, Development, Stable, and Deprecated. These options vary significantly from their equivalents in both CWE and CAPEC for various reasons. For example, CWE's status definitions were unnecessarily restrictive (only three CWE entries obtained the highest rating of "Usable," despite widespread use of CWE).
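As a rough illustration, the four maturity options listed above could be modeled as a closed set of values. The enum and the `parse_maturity` helper are hypothetical and are not part of the CQE schema; only the four option names come from the text.

```python
from enum import Enum


class Maturity(Enum):
    """The four maturity options named in the text."""
    PRELIMINARY = "Preliminary"
    DEVELOPMENT = "Development"
    STABLE = "Stable"
    DEPRECATED = "Deprecated"


def parse_maturity(text: str) -> Maturity:
    """Map free text to a maturity value; raises ValueError for unknown values."""
    return Maturity(text.strip().title())


status = parse_maturity(" stable ")
```

With this sketch, a CWE-only status such as "Usable" would be rejected with a `ValueError` rather than silently accepted.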

Finally, as may be surmised from the above discussion, the XML and XSD for CQE were adapted from the XML and XSD used by CWE 2.9 (namely, CWE schema version 5.4.2). However, to support the newly defined CQE concepts, new element types were defined in the CQE XSD; for example, instead of <Weakness> elements as in CWE, CQE has <Quality_Characteristic>.
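To make the CWE-style structure more concrete, the following sketch reads a made-up XML fragment using Python's standard `xml.etree.ElementTree`. Only the element name `Quality_Characteristic` comes from the text above. The wrapper element, the `ID`, `Name`, and `Status` attributes, the `Description` child, and the ID value 9001 are assumptions modeled loosely on CWE conventions; they may not match the actual CQE XSD.

```python
import xml.etree.ElementTree as ET

# Hypothetical fragment: everything except the Quality_Characteristic
# element name is an assumption, not the real CQE schema.
SAMPLE = """
<Quality_Characteristics>
  <Quality_Characteristic ID="9001" Name="Maintainability" Status="Preliminary">
    <Description>Illustrative text only.</Description>
  </Quality_Characteristic>
</Quality_Characteristics>
"""


def read_characteristics(xml_text: str) -> list:
    """Return one dict per Quality_Characteristic element in the document."""
    root = ET.fromstring(xml_text.strip())
    return [
        {
            "id": int(el.get("ID")),
            "name": el.get("Name"),
            "status": el.get("Status"),
            "description": el.findtext("Description", default="").strip(),
        }
        for el in root.iter("Quality_Characteristic")
    ]


entries = read_characteristics(SAMPLE)
```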

The CQE web-based version (like the CWE web presence) includes separate pages for views and individual entries.

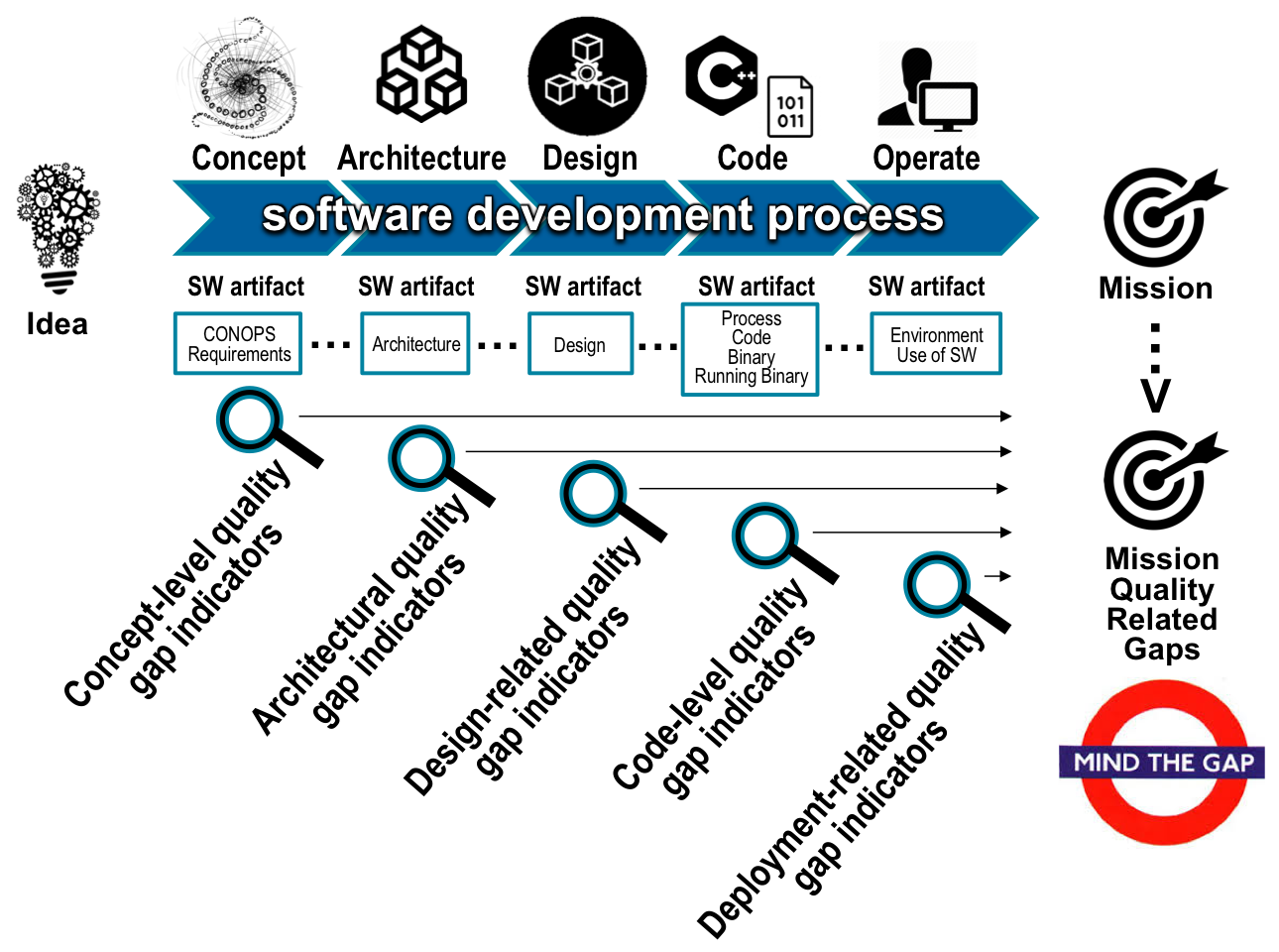

The indication that a quality issue affecting the operational quality of the software has been introduced during a given lifecycle phase may or may not be discernible in the artifacts of that phase. However, such an issue can only be detected from the point at which it "appears" in the software artifacts onward, where software artifacts include the conceptualization of the software, the architecture, the design, the code itself, and the deployed configuration of the software when it is operational, as shown in Figure 4.

Populating an Initial Draft of CQE

The current version of CQE is "Draft 0.9," since CQE is not yet mature enough to be given a 1.0 version number. Although the content donations covered many different types of quality issues (including security-related ones), the initial focus of the CQE team's analysis has been on quality issues that are NOT weaknesses that could directly lead to vulnerabilities. This initial draft content is derived from OMG/CISQ material, along with entries derived from the SQAE and from a few of the donated collections, which have allowed the team to ensure that the schema can accommodate attributes and characteristics that are not necessarily automatically measurable.

CQE's supplementary elements (such as code examples) are incomplete at this point, which limits the general usability of CQE Draft 0.9 for the public. However, CQE Draft 0.9 provides the necessary structured content to support discussion with those that donated content and other organizations helping with a peer review of the structure and organizing approach.

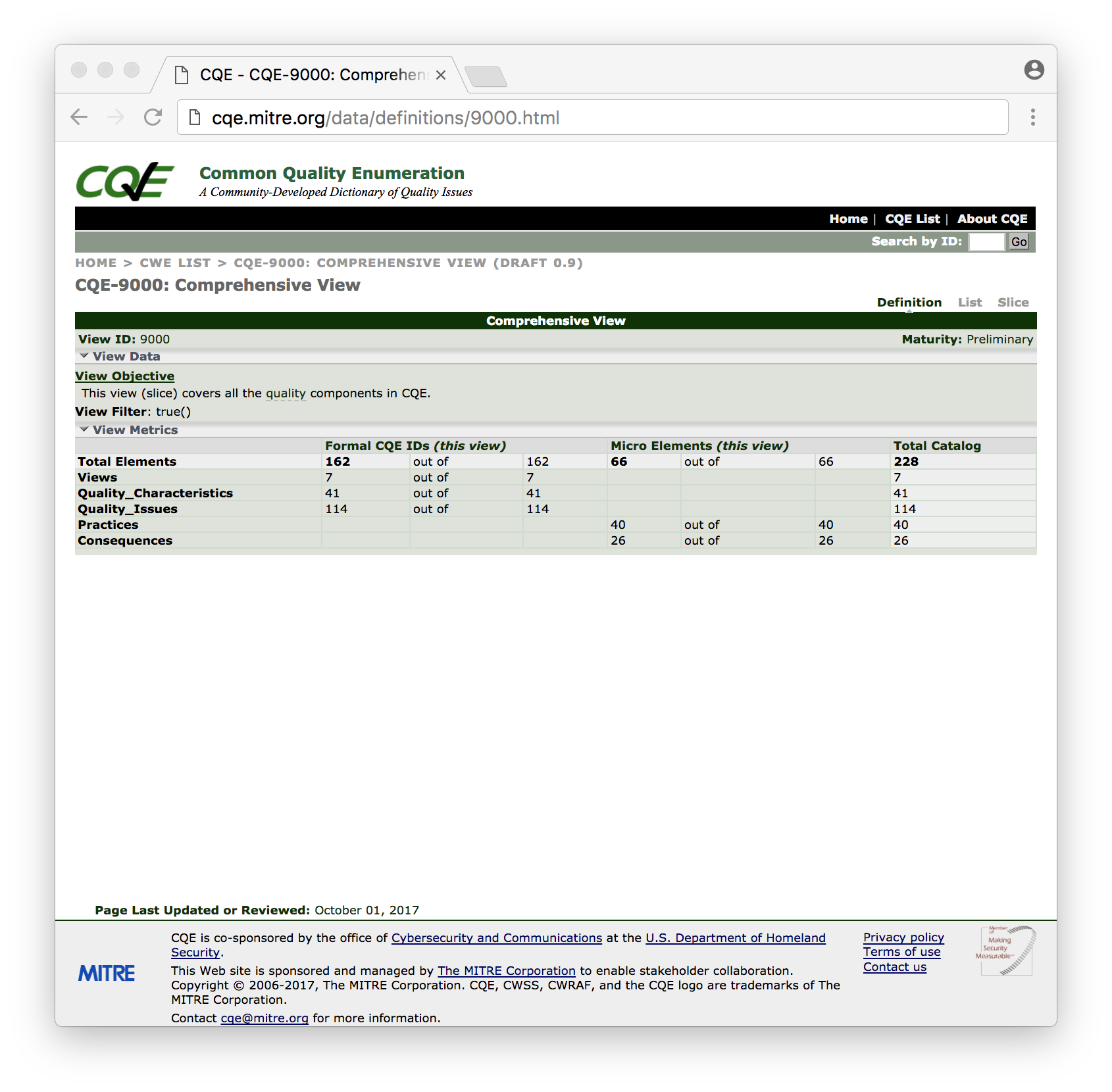

For CQE Draft 0.9, the CQE team chose to use a numeric identification (ID) scheme similar to the scheme used for CWE and CAPEC. However, to eliminate possible ambiguity and overlap with existing CWE/CAPEC IDs, CQE ID assignment began at 9000 and proceeds upward from there. As a nod to the retired ISO quality standard 9000, the ID "9000" is used for the comprehensive view of all elements.

While the CQE team was able to obtain rules for quality attributes from approximately ten sources—totaling approximately 7,000 rules—insufficient time and resources were available to do the analysis and synthesis to integrate all of these rules into CQE Draft 0.9. The CQE team attempted to apply automated keyword matching and clustering of such rules from all sources to find and merge the most commonly-used rules. The team also developed a thesaurus of equivalent terms, and a list of "stop words" (i.e., terms that should not be automatically matched, such as "the" or "quality").

Unfortunately, this effort was more labor-intensive and less productive than expected. While this automated keyword-based clustering worked well in other projects such as CVE, there was too little overlap between different CQE sources to find suitable matches based on just the word matching; that is, each source used radically different terminology and organizational structures.
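A minimal sketch of the kind of keyword matching described above follows. The text does not say which similarity measure the team used; Jaccard overlap of keyword sets is assumed here purely for illustration. The stop words "the" and "quality" come from the text, while the other stop words, the thesaurus entries, and the sample rule titles are hypothetical.

```python
import re

# "the" and "quality" are the stop words named in the text; the rest are assumed.
STOP_WORDS = {"the", "a", "of", "quality"}
# Hypothetical thesaurus mapping equivalent terms to one canonical term.
THESAURUS = {"function": "callable", "method": "callable"}


def keywords(rule: str) -> frozenset:
    """Lowercase the rule, drop stop words, and normalize terms via the thesaurus."""
    words = re.findall(r"[a-z]+", rule.lower())
    return frozenset(THESAURUS.get(w, w) for w in words if w not in STOP_WORDS)


def similarity(a: str, b: str) -> float:
    """Jaccard overlap of the two keyword sets (0.0 when either set is empty)."""
    ka, kb = keywords(a), keywords(b)
    if not ka or not kb:
        return 0.0
    return len(ka & kb) / len(ka | kb)


related = similarity("Avoid the unused function parameter", "Unused method parameter")
unrelated = similarity("Unused method parameter", "File lacks header comment")
```

Here `related` is 0.75 and `unrelated` is 0.0. When sources use radically different vocabulary, as the team found, most pairs of genuinely equivalent rules score near zero unless the thesaurus is very complete, which is consistent with the difficulty described above.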

However, quality issues center on operational capabilities: where there is a gap between the delivered level of capability and the needed level, we can determine which quality is not sufficient. Understanding quality gaps only at the point the system/software is operating, though, is much later than we would prefer. Ideally, we should be identifying the possibility of the operational quality gap during the phase of the lifecycle where that quality gap potential is introduced. To do that, we want to know what we can measure about all of the artifacts produced in the creation of the software to indicate we are going to miss the required quality characteristic in the operational software/system.

With this in mind, the CQE team was able to establish an initial set of quality issues for CQE Draft 0.9.

Currently, CQE IDs have been assigned for 112 Quality Issues, 41 Quality Characteristics and 7 Views.

The other quality concepts are also part of the CQE knowledge, with 40 Practices and 26 Consequences. While these CQE micro elements are given numeric IDs, only the Quality Issues, Characteristics and Views equate to formal CQE entries and are assigned CQE IDs.

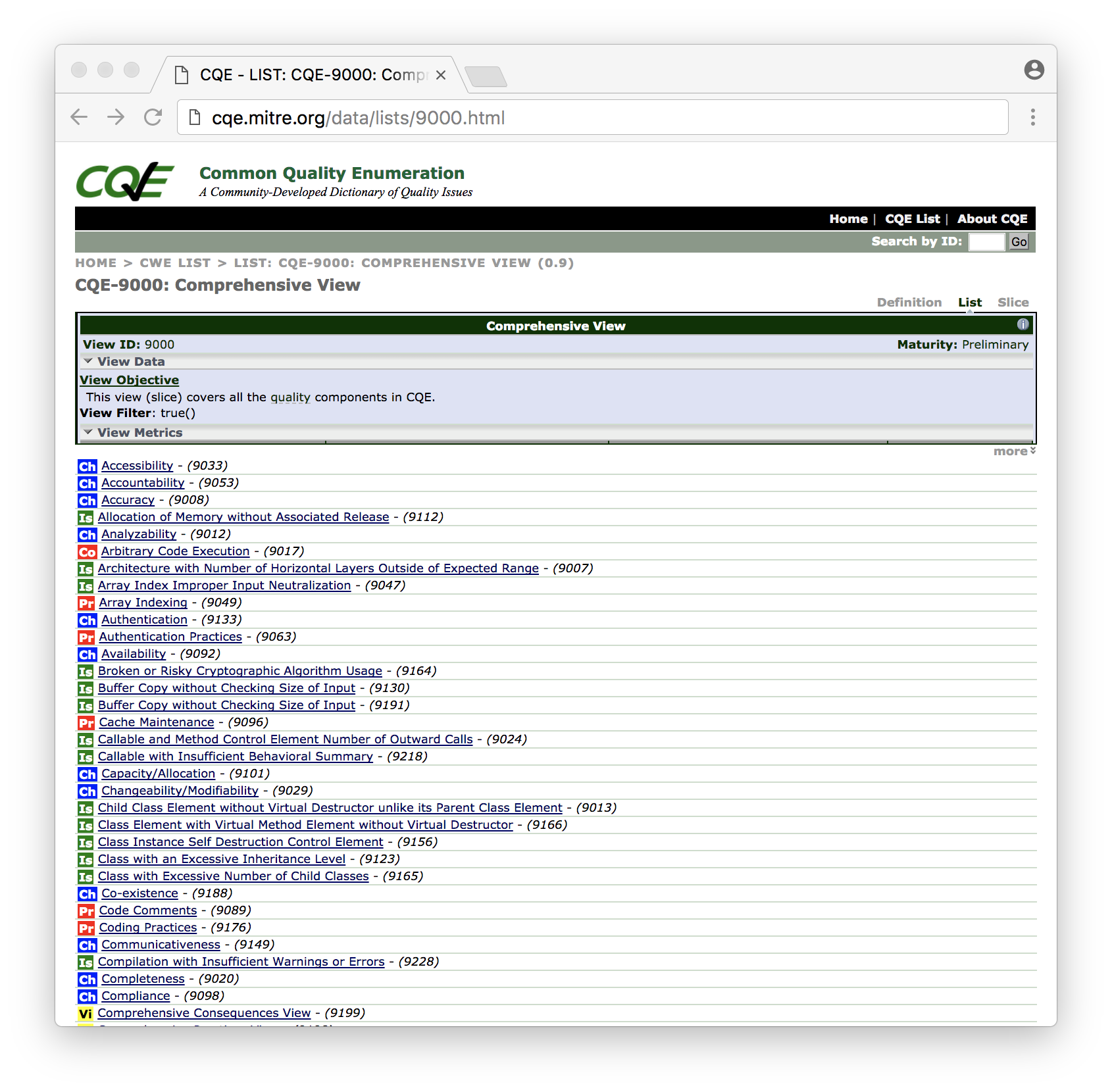

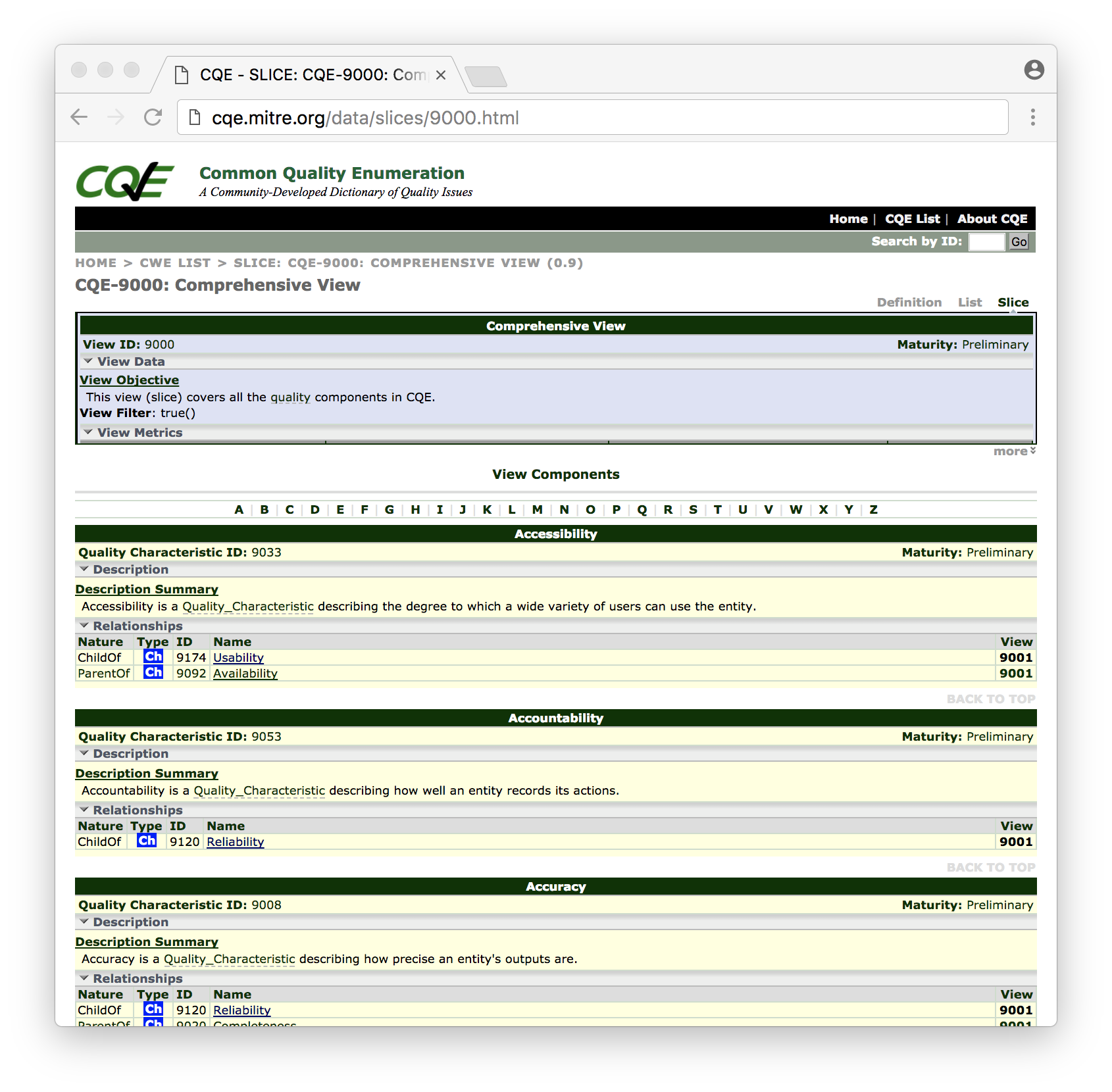

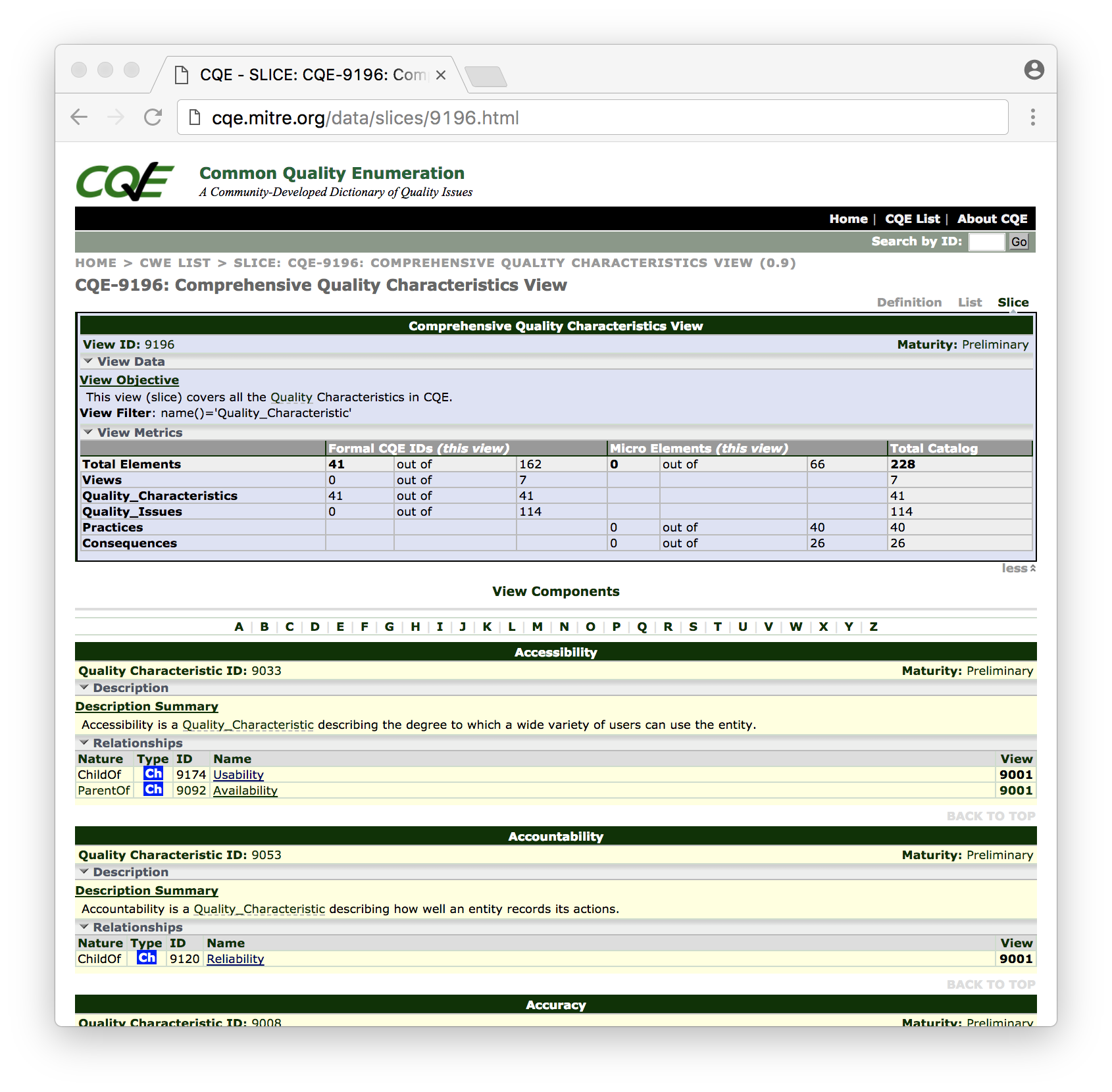

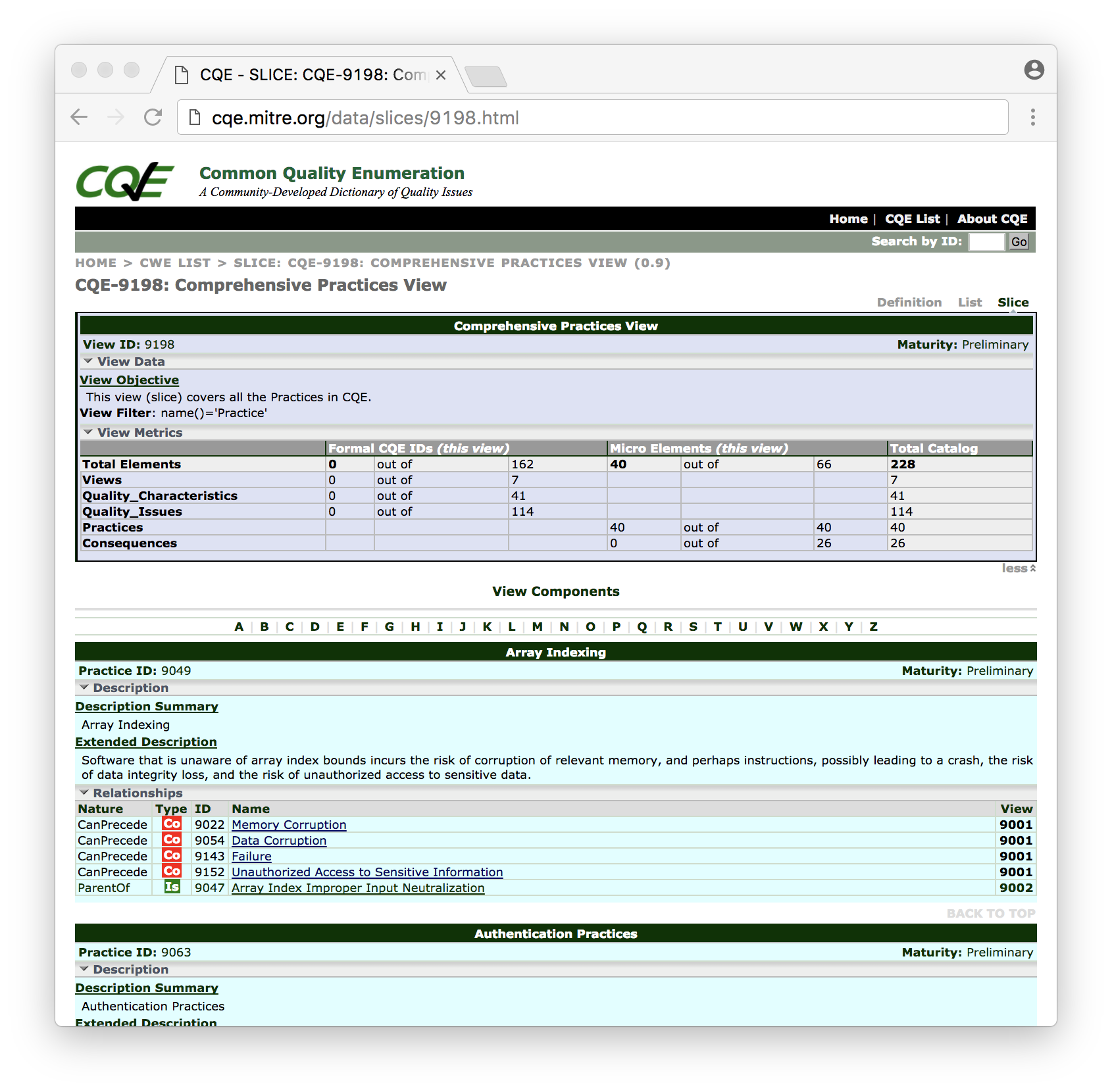

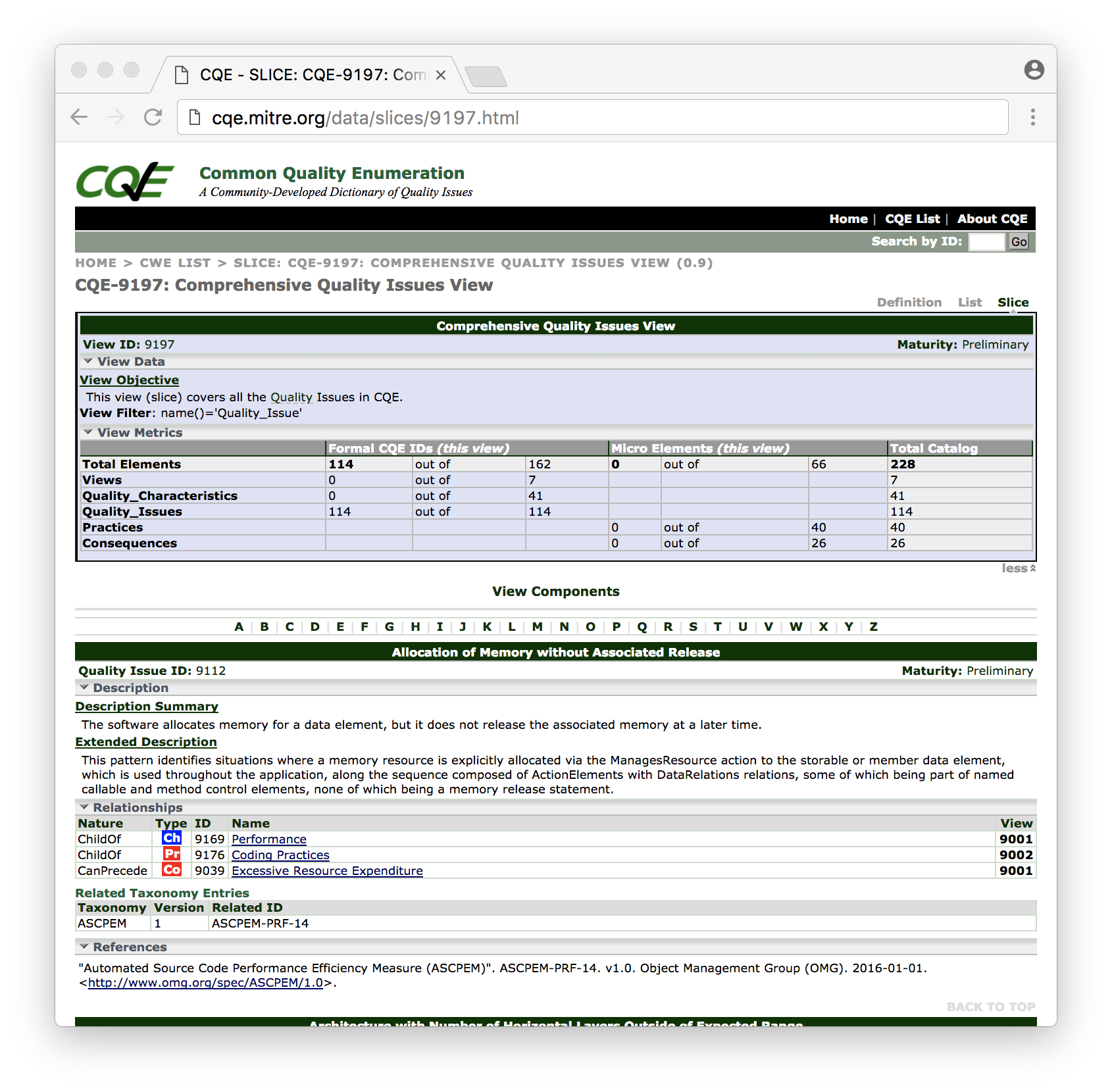

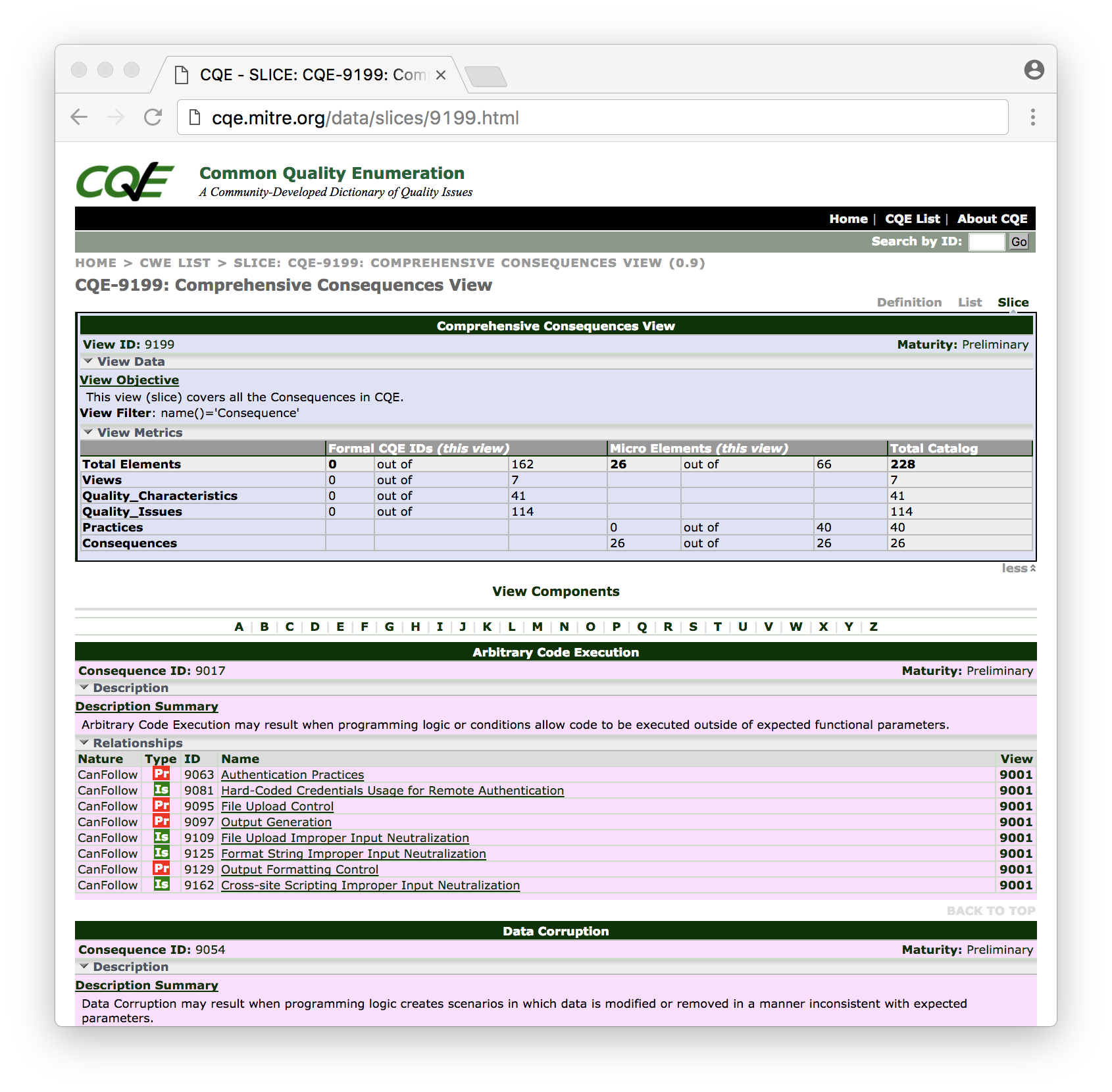

For views, there are presentation modes of "Definition," "List," and "Slice," as shown in Figures 5, 6, and 7.

Examples of the web presentations of four Views currently defined are shown in Figures 8-11, including Quality Characteristics View (ID 9196), Practices View (ID 9198), Quality Attributes View (ID 9197), and Consequences View (ID 9199).

Future Work: Tasks, Questions, and Challenges

This section describes the future work that is needed to realize CQE.

Create New and Revised Content

One of the future tasks to evolve CQE will be to develop, refine, and synthesize additional CQE content with the goal of creating a widely-available public CQE collection that can be published on a public CQE website, is logically consistent, and incorporates the majority of the donated content gathered under the Unilateral NDAs.

This work will include, but is not necessarily limited to:

- Identify and create additional entries to address gaps (automated and non-automated)

- Refine/finalize language and terminology and update definitions, titles, etc.

- Analyze and fill in relationships where appropriate

- Prioritize additional fields that must be filled in (e.g., code examples)

- Fill in currently empty fields

- Modify any copyrighted content (or clearly mark if given approval), especially for descriptions and terms used in CQE Draft 0.9

A major challenge for additional content is the scale of the effort. Approximately 7,000 rules already have been submitted by the current organizations engaged with the CQE effort; this means that extensive work is necessary to find the associated match in CQE. In addition to optimizations such as automated keyword matching, the CQE team may be able to match some data sources using cross-references, e.g., to other rule sets such as MISRA C.
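The cross-reference idea above can be sketched as grouping rules from different sources by the external reference they share. The record layout, the tool names, the rule IDs, and the reference labels below are all placeholders; no real MISRA C rule numbers or vendor rule IDs are implied.

```python
from collections import defaultdict


def group_by_cross_reference(rules: list) -> dict:
    """Group (source, rule_id) pairs by shared reference.

    Only references cited by at least two different sources are kept,
    since those are the candidate cross-source matches.
    """
    groups = defaultdict(list)
    for rule in rules:
        for ref in rule["refs"]:
            groups[ref].append((rule["source"], rule["rule_id"]))
    return {
        ref: members
        for ref, members in groups.items()
        if len({source for source, _ in members}) > 1
    }


# Placeholder data: sources, rule IDs, and reference labels are invented.
rules = [
    {"source": "tool_a", "rule_id": "A-17", "refs": ["MISRA-C:placeholder-1"]},
    {"source": "tool_b", "rule_id": "B-203",
     "refs": ["MISRA-C:placeholder-1", "MISRA-C:placeholder-2"]},
    {"source": "tool_c", "rule_id": "C-9", "refs": []},
]
matches = group_by_cross_reference(rules)
```

In this sketch, the rules from `tool_a` and `tool_b` are paired because they cite the same placeholder reference, without relying on any overlap in their wording.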

To distribute the effort, the CQE team will need more direct engagement with the original data contributors and other members of the community who can help evolve the information in CQE. Each contributor could then perform the mappings to current draft CQE content and suggest changes, additions, and refactoring, with the CQE team reviewing the mappings and addressing any gaps. In the early days of CVE, a limited version of this technique was used successfully.

Additional precision for automatically-detectable quality attributes may be obtainable by explicitly reviewing the various rules for existing coding standards, such as MISRA C.

Since SQAE is currently the only source for non-automatically-detectable characteristics, additional research or consultation may be necessary to identify and fill in additional gaps within CQE.

Review and Modify CQE Organization

With the first cut at the CQE organizational constructs complete, future work will also include engagement with outside groups that have knowledge and interests in this area to address a series of questions:

- Is the current organization reasonable?

- Should some concepts be more appropriately treated as XML elements instead of "CQE entries"?

- Should some types of CWE-style views be avoided?

- What views are appropriate? The only views in CQE Draft 0.9 are the comprehensive, the default-hierarchy, and the four comprehensive views of each component (characteristics, issues, practices, consequences).

The organizations that have donated material, others interested in this area of study such as the National Institute of Standards and Technology (NIST) and SEI, and other subject matter experts need to help CQE evolve and attract attention from others.

Normalize Terminology

As previously mentioned, the CQE team discovered that vendors use widely varying terms for similar concepts. For example, the OMG/CISQ documents define and use a more-general term "callable" as opposed to other sources that use "function" and/or "method." A focused research effort (and/or active community engagement) is needed to identify and/or define terms that are acceptable and understandable to most stakeholders.

It should be noted that this discrepancy in terminology was also present in CWE, and it required—and still requires—concerted research and community engagement. The CWE Glossary was developed as an attempt to at least use terminology consistently within CWE and provide a reference as to how terms are defined (as they are used within CWE).

There may be opportunities to study and/or develop universal programming concepts or models.

Define and Prioritize Use Cases for CQE

A more explicit definition of use cases and stakeholders for CQE (such as developers, managers of developers, or tool vendors) could be used to prioritize the most important stakeholders and use cases, further guiding development and community engagement for CQE.

Represent and Manage Tension Among Quality Characteristics

The CQE team believes that, within the broad topic of quality, there is potential interplay and tension among different quality characteristics; improving one characteristic could negatively impact another. For example, a change that is important to achieve high performance in a piece of software might adversely impact its maintainability or portability.

Some open questions regarding this quality tension are:

- How can this interplay be best represented in CQE?

- Is it feasible to represent all the potential interplay? It may be that the potential chaining relationships are so numerous that they reduce usability.

- How can a CQE user who cares about one particular Quality Characteristic focus only (or primarily) on that specific CQE element? How should CQE support the user in discovering the interplay with other Characteristics and at what level?

Miscellaneous Discussion Points

The following additional questions and issues have arisen:

- How can CQE unify relationships among Quality Characteristics when these types of relationships can vary so widely across tools? For example, one tool might say that characteristic X is a child of characteristic Y; a different source might say that X and Y are both children of some other characteristic Z.

- What role (if any) do CWE-style Categories play, and are they different from other element types?

- Which types of elements should not be part of the CQE "ID namespace," i.e., should not receive CQE IDs? If those elements must be referenced, what should the ID scheme look like? (For example, in CWE, certain frequently-used mitigations are given their own "mitigation ID.")

- Regarding the level of abstraction, how can CQE define appropriate guidelines for choosing the level of abstraction? How deep is too deep, that is, when do quality attributes get so specific that they should be combined under a more abstract CQE entry instead of being assigned their own entries? For example, some tools have multiple rules (equivalent to CQE Quality Attributes) that are language-specific but deal with the same concept.

- How can CQE identify vendor-submitted data that maps to abstract, high-level CWE IDs but is not security related (for example, some tool-submitted data maps to high-level CWE entries such as CWE-398 "Poor Code Quality")? A naive attempt to exclude such data would likely omit important Quality Attributes that should be explicitly represented at a lower level of abstraction.

- Should CQE support "measure elements," as defined and used in OMG/CISQ documents, and if so, how? An example measure element is "number of functions with more than N lines of code"; the value of "N" may vary depending on the user and/or tool.
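The example measure element in the last question above, "number of functions with more than N lines of code," can be sketched as a function that takes N as a parameter. This is an illustration only: it measures Python source using the standard `ast` module, and it assumes that a function's length is the span from its `def` line to its last line, which is one of several possible definitions and not one taken from the OMG/CISQ documents.

```python
import ast


def count_long_functions(source: str, n: int) -> int:
    """Count function definitions spanning more than n source lines."""
    count = 0
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            # Assumed definition of length: def line through last body line.
            length = node.end_lineno - node.lineno + 1
            if length > n:
                count += 1
    return count


SAMPLE = '''
def short():
    return 1

def longer(x):
    y = x + 1
    z = y * 2
    return z
'''

over_three = count_long_functions(SAMPLE, 3)
over_one = count_long_functions(SAMPLE, 1)
```

Here `over_three` is 1 and `over_one` is 2, which shows how the same code yields different measurements as N changes. This is the variability across users and tools that the question raises.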

Contact Us

To discuss the CQE effort in general or any other questions or concerns, please email us at cqe@mitre.org.